Building Innovative GenAI Applications with the GenAI Stack: Unleashing the Power of Docker

In the fast-evolving landscape of artificial intelligence, Generative AI (GenAI) is at the forefront, opening up exciting opportunities for developers and businesses. One of the most significant challenges in GenAI development is creating a robust, efficient, and scalable infrastructure that harnesses the power of AI models. To address this challenge, the GenAI Stack has emerged as a game-changer, combining cutting-edge technologies like Docker, LangChain, Neo4j, and Ollama. In this article, we will delve into the intricacies of these technologies and explore how they work together to build innovative GenAI applications.

Understanding the GenAI Stack

Before we dive into the technical details, let’s establish a clear understanding of what the GenAI Stack is and what it aims to achieve.

The GenAI Stack is a comprehensive environment designed to facilitate the development and deployment of GenAI applications. It provides a seamless integration of various components, including a management tool for local Large Language Models (LLMs), a database for grounding, and GenAI apps powered by LangChain. Here’s a breakdown of these components and their roles:

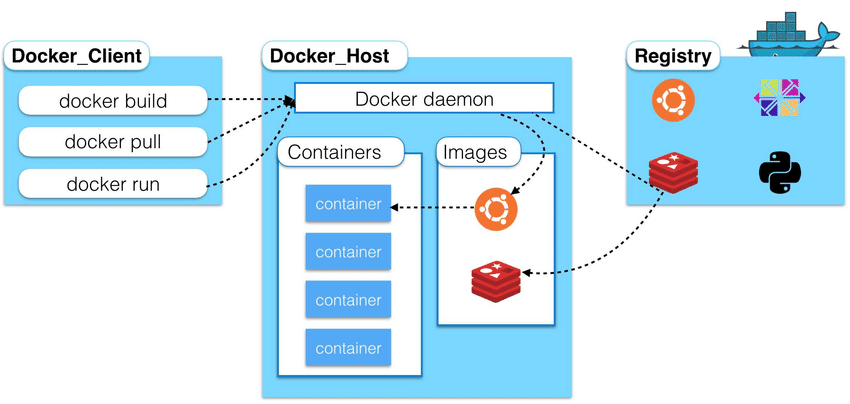

Docker: Docker is a containerization platform that allows developers to package applications and their dependencies into containers. These containers are lightweight, portable, and provide consistent runtime environments, making them an ideal choice for deploying GenAI applications.

LangChain: LangChain is an open-source framework for building applications on top of LLMs. It supplies the building blocks for the application logic, such as prompts and chains, and connects the model to the other components of the GenAI Stack.

Neo4j: Neo4j is a graph database that stores the knowledge graph used to ground GenAI applications. Its graph model is well suited to managing and querying complex relationships and data structures.

Ollama: Ollama is the component that runs the models. It is a tool for managing and running LLMs locally, so you can run them on your own infrastructure or even on your local machine, which gives you more control and flexibility over your GenAI models.

Docker: The Containerization Revolution

Docker has changed how applications are deployed and managed. It popularized containerization, which lets developers bundle their applications and dependencies into containers. Containers are isolated from one another but share the host operating system’s kernel, which makes them lightweight and efficient. Docker’s advantages include:

Portability: Containers can run on any platform that supports Docker, ensuring consistency across different environments.

Scalability: Container orchestrators such as Kubernetes or Docker Swarm make it easy to scale containerized applications horizontally.

Resource Efficiency: Containers consume fewer resources compared to traditional virtual machines, allowing for better resource utilization.

Source: Whizlabs

In the GenAI Stack, Docker is the foundation that ensures all components work seamlessly together, providing a consistent and controlled environment for GenAI applications.
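To make the idea of components working together more concrete, here is a minimal Python sketch of how a container setup might decide which services to start first. The service names and their dependencies are assumptions made for illustration, loosely based on the components described above; they are not copied from any real Compose file.

```python
from graphlib import TopologicalSorter

# Hypothetical services mapped to the services they depend on.
SERVICES = {
    "ollama": [],                # local LLM runtime
    "neo4j": [],                 # graph database
    "app": ["ollama", "neo4j"],  # LangChain application and its UI
}

def start_order(services):
    """Return service names so that each one comes after its dependencies."""
    return list(TopologicalSorter(services).static_order())

order = start_order(SERVICES)
print(order)
```

The application container comes last because it needs both the model runtime and the database to be available, which is the same kind of ordering a dependency declaration in a container setup expresses.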

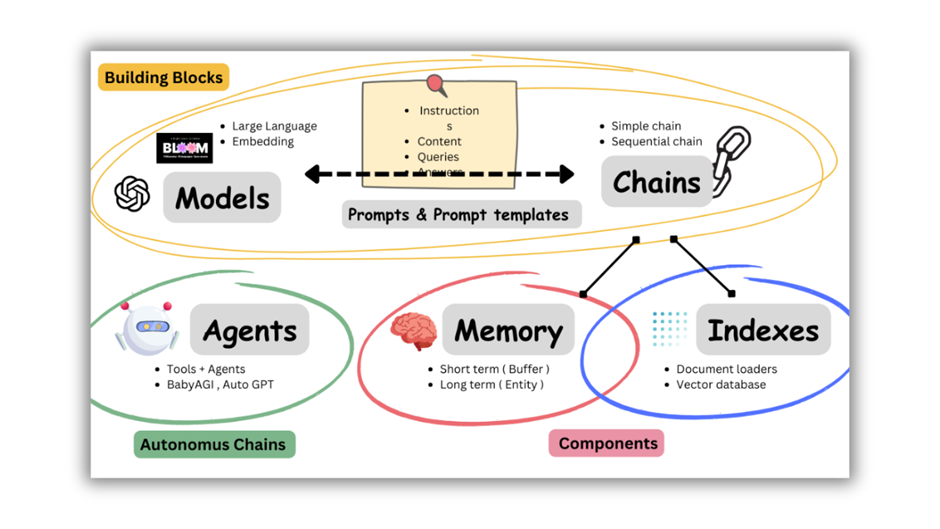

LangChain: Orchestrating GenAI Applications

LangChain is the orchestrator of GenAI applications within the GenAI Stack. It is designed to simplify the process of building and managing GenAI applications. Key features of the LangChain-based application layer include:

Application Logic: The application logic is written in Python with LangChain, allowing developers to create GenAI apps easily.

User Interface: The apps use Streamlit for their user interfaces, enabling developers to build interactive and user-friendly applications.

Docker Integration: The LangChain apps run in Docker containers alongside the other components, which simplifies packaging and deployment.

Development Environment: The stack provides a development environment that supports rapid feedback loops, making it easier for developers to iterate on their applications.

Source: Packt

LangChain is the bridge that connects various components of the GenAI Stack, ensuring that they work together cohesively to bring GenAI applications to life.
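The orchestration idea can be sketched in plain Python as a pipeline of steps: build a prompt, call a model, and clean up the output. This is only an illustration of the pattern. The `Chain` class, `fake_llm`, and the other names below are hypothetical and are not LangChain's API, although the `|` composition loosely mirrors the pipe style LangChain uses.

```python
from dataclasses import dataclass
from typing import Callable, List

@dataclass
class Chain:
    """Runs steps in order, feeding each step's output to the next one."""
    steps: List[Callable]

    def __or__(self, step):
        # chain | step returns a new chain with the step appended.
        return Chain(self.steps + [step])

    def invoke(self, value):
        for step in self.steps:
            value = step(value)
        return value

def prompt_template(inputs: dict) -> str:
    """Fill a fixed template with the grounding context and the question."""
    return (
        "Answer using only the context.\n"
        f"Context: {inputs['context']}\n"
        f"Question: {inputs['question']}"
    )

def fake_llm(prompt: str) -> str:
    # Stand-in for a real model call: echoes the last line of the prompt.
    return "ECHO " + prompt.splitlines()[-1]

def parse(text: str) -> str:
    """Trim surrounding whitespace from the model output."""
    return text.strip()

chain = Chain([prompt_template]) | fake_llm | parse
answer = chain.invoke(
    {"context": "Neo4j is a graph database.", "question": "What is Neo4j?"}
)
print(answer)  # ECHO Question: What is Neo4j?
```

In a real application the stub model would be replaced by a call to a locally running LLM, and the context would come from the database rather than a hard-coded string.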

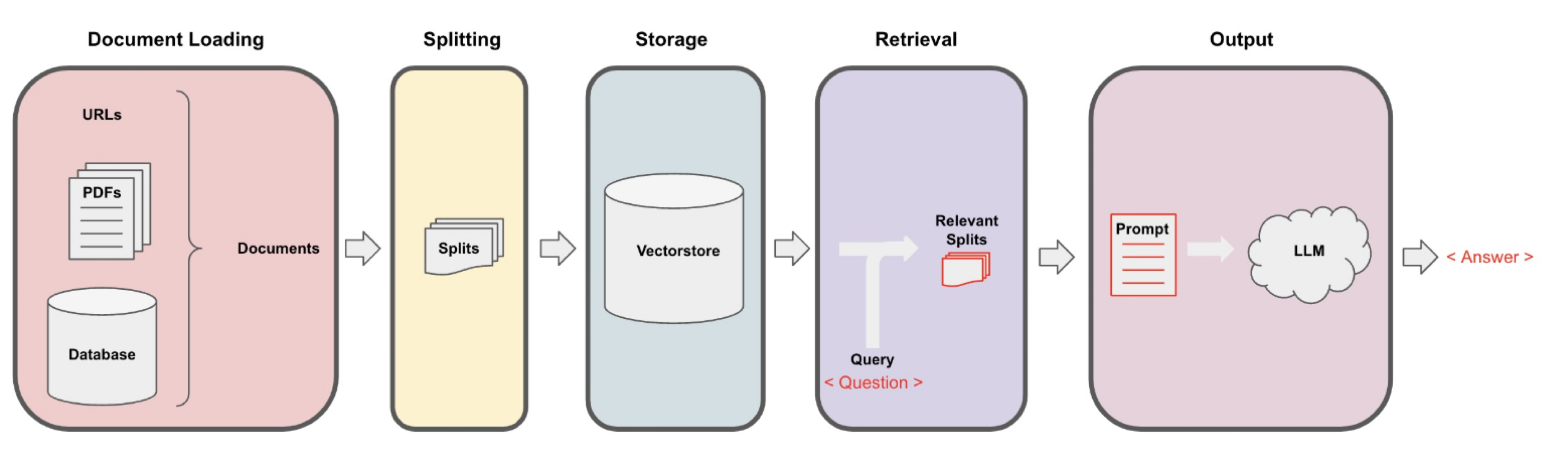

Neo4j: Powering Knowledge Graph-Based Applications

Knowledge graphs have become a pivotal component in GenAI applications. Neo4j, a graph database, plays a crucial role in managing the intricate relationships and data structures that underpin these applications. Key attributes of Neo4j include:

Graph Database: Neo4j stores and manages data in a graph format, making it ideal for applications that require intricate data relationships.

Querying Capabilities: Neo4j provides Cypher, a declarative query language for graphs, allowing developers to retrieve and manipulate data in a flexible and efficient manner.

Scalability: Neo4j supports clustering, which lets it scale out across multiple servers to accommodate growing data and application demands.

In GenAI applications, Neo4j serves as the foundation for creating knowledge graph-based applications. It allows developers to model and query complex relationships, ultimately enhancing the accuracy and relevance of GenAI responses.
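As a rough illustration of how a knowledge graph can supply grounding context, the sketch below stores a few relationships in memory and collects the facts reachable from one node. It uses plain Python rather than a Neo4j connection, and the triples are invented for the example. In Neo4j, a comparable lookup could be written in Cypher roughly as `MATCH (n {name: 'LangChain'})-[r*1..2]->(m) RETURN n, r, m`, where the `name` property is an assumed schema.

```python
from collections import defaultdict

# Hypothetical (subject, relationship, object) triples for illustration only.
TRIPLES = [
    ("Ollama", "RUNS", "LLM"),
    ("LangChain", "CALLS", "Ollama"),
    ("LangChain", "QUERIES", "Neo4j"),
    ("Neo4j", "STORES", "KnowledgeGraph"),
]

def build_graph(triples):
    """Index triples by subject so outgoing relationships are easy to follow."""
    graph = defaultdict(list)
    for subject, relation, obj in triples:
        graph[subject].append((relation, obj))
    return graph

def facts_about(graph, node, depth=2):
    """Collect facts reachable from `node` within `depth` hops."""
    facts, frontier, seen = [], [node], {node}
    for _ in range(depth):
        next_frontier = []
        for current in frontier:
            for relation, obj in graph.get(current, []):
                facts.append(f"{current} {relation} {obj}")
                if obj not in seen:
                    seen.add(obj)
                    next_frontier.append(obj)
        frontier = next_frontier
    return facts

graph = build_graph(TRIPLES)
context = facts_about(graph, "LangChain")
print("\n".join(context))
```

The collected facts can then be placed into the prompt as context, which is how graph data helps keep a model's answer tied to known relationships.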

Ollama: The Power of Local LLMs

Large Language Models (LLMs) are at the heart of GenAI applications. Ollama, an integral part of the GenAI Stack, brings LLMs to the local environment, offering more control and flexibility. Key advantages of Ollama include:

Open Source: Ollama is an open-source project, enabling developers to run LLMs without depending on external providers.

Data Control: Ollama allows developers to have complete control over data flows, storage, and sharing.

Local Deployment: Developers can run Ollama on their infrastructure or even on a local machine, making it a versatile choice for GenAI development.

Source: Ollama

Ollama represents a significant step forward in GenAI development by providing a seamless solution for setting up and running local LLMs, removing dependencies on external providers, and offering more control over the data flow.
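Because Ollama runs locally, an application can talk to it over HTTP on the same machine. The sketch below shows one way this might look using only the Python standard library. It assumes Ollama is listening on its default port, 11434, and that a model named `llama2` has already been pulled; both the model name and the helper function names are assumptions for this example.

```python
import json
import urllib.request

# Default local endpoint for Ollama's text generation API.
OLLAMA_URL = "http://localhost:11434/api/generate"

def build_request(prompt, model="llama2"):
    """Build a POST request asking for one complete, non-streamed response."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def generate(prompt, model="llama2"):
    """Send the prompt to the local server and return the generated text."""
    with urllib.request.urlopen(build_request(prompt, model), timeout=120) as resp:
        return json.loads(resp.read())["response"]

# Building the request does not require a running server.
request = build_request("Why is the sky blue?")
print(request.full_url)
```

Calling `generate("Why is the sky blue?")` only works when an Ollama server is running locally. Since the request never leaves the machine, the prompt and the response stay under your control.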

Conclusion

The GenAI Stack, powered by Docker, LangChain, Neo4j, and Ollama, is a formidable combination for building innovative GenAI applications. It simplifies the development process, provides the infrastructure for knowledge graph-based applications, and empowers developers with local LLM capabilities. With these technologies at your disposal, you can create GenAI applications that are accurate, relevant, and highly customizable.

As the GenAI landscape continues to evolve, the GenAI Stack is a beacon of innovation, enabling developers to unlock the full potential of artificial intelligence. Whether you’re building chatbots, support agents, or knowledge retrieval systems, the GenAI Stack has the tools you need to make your GenAI applications shine.

It’s time to explore the possibilities, experiment with GenAI, and build the next generation of intelligent applications using the GenAI Stack. The future of GenAI is here, and it’s waiting for your creative ideas and innovations to transform it.

Disclaimer: The images and URLs in this blog are for illustrative purposes only and may not represent real services or websites.