Deep Dive into Context: MCP, A2A and RAG

RAG combines retrieval from external sources with LLM generation to produce informed responses. For instance, it retrieves documents from a vector store before prompting the model.

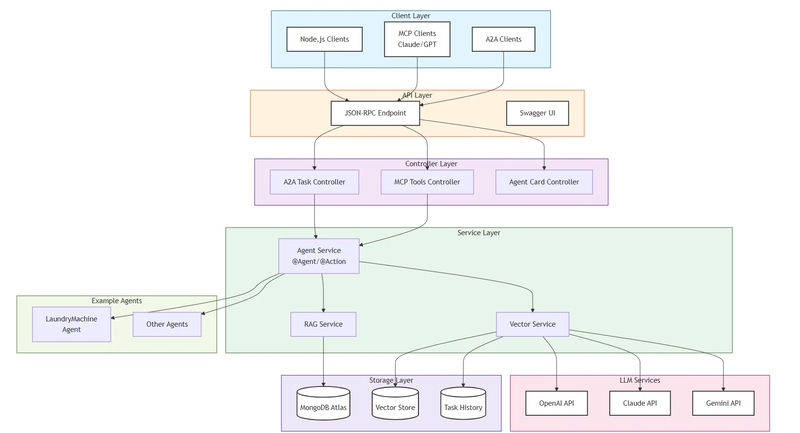

MCP, introduced by Anthropic, acts as a “USB-C for AI,” allowing models to dynamically access tools and data via a client-server model. It supports prompts, resources, and tools for contextual enhancement.

A2A, developed by Google, enables agents to exchange tasks and results over HTTP, using Agent Cards for discovery. It’s modality-agnostic, supporting text, images, and more.

Related terms include ReAct (reasoning + acting loop for decision-making) and ACP (local-first agent coordination, differing from A2A’s web-native focus).

Source: Internet

Key Points

- RAG (Retrieval-Augmented Generation): Enhances AI responses by retrieving relevant external data before generating output, which reduces hallucinations and brings in up-to-date information. It suits knowledge-intensive tasks such as question answering, but it does not perform actions on its own.

- MCP (Model Context Protocol): A standardized protocol for connecting AI agents to external tools, data sources, and prompts. It gives a single agent a universal interface to those capabilities; it targets tool access rather than multi-agent collaboration.

- A2A (Agent-to-Agent): An open protocol that lets AI agents communicate, discover each other's capabilities, and delegate tasks across systems. It supports teamwork among agents, though coordination remains hard in complex settings such as multi-vendor integrations.

- Key Differences: RAG prioritizes knowledge augmentation, MCP enables tool integration for individual agents, and A2A facilitates inter-agent communication. These techniques complement each other, with no one-size-fits-all approach—RAG suits static data queries, MCP for action-oriented tasks, and A2A for collaborative workflows.

- Analogy for Easy Recall: Imagine solving a puzzle as a team. RAG is like consulting a reference book for missing pieces (knowledge retrieval). MCP is equipping yourself with tools like scissors or glue to manipulate pieces (tool access). A2A is discussing with teammates to share pieces and strategies (agent collaboration). This highlights how RAG provides info, MCP enables actions, and A2A promotes sharing.

Key Differences

| Aspect | RAG | MCP | A2A |
|---|---|---|---|
| Primary Focus | Knowledge retrieval & generation | Agent-tool/data integration | Inter-agent communication |
| Use Case | Q&A, summarization | Task automation, real-time data | Collaboration, task delegation |
| Interaction | Retrieve → Augment → Generate | Client → Server → Tool | Client Agent → Remote Agent |
| Strengths | Reduces hallucinations | Standardized access | Vendor-neutral scalability |
| Limitations | Static knowledge bases | Single-agent oriented | Requires network connectivity |

Python Code Examples

Here’s how to implement basic versions of each.

RAG Example (using LangChain):

from langchain_openai import OpenAIEmbeddings, ChatOpenAI
from langchain_community.vectorstores import Chroma
from langchain_core.prompts import PromptTemplate
from langchain_core.runnables import RunnablePassthrough
from langchain_core.output_parsers import StrOutputParser

# Sample documents
docs = ["Document 1 content...", "Document 2 content..."]

# Embed and store (requires OPENAI_API_KEY in the environment)
embeddings = OpenAIEmbeddings()
vectorstore = Chroma.from_texts(docs, embeddings)
retriever = vectorstore.as_retriever()

def format_docs(documents):
    """Join retrieved Document objects into plain text for the prompt."""
    return "\n\n".join(doc.page_content for doc in documents)

# Prompt template
template = """Use the following context to answer the question:
{context}
Question: {question}
Answer:"""
prompt = PromptTemplate.from_template(template)

# LLM
llm = ChatOpenAI(model="gpt-4o-mini")

# Chain: retrieve -> format -> fill prompt -> generate -> plain string
chain = (
    {"context": retriever | format_docs, "question": RunnablePassthrough()}
    | prompt
    | llm
    | StrOutputParser()
)

# Usage
response = chain.invoke("Your question here")
print(response)

This retrieves relevant docs, augments the prompt, and generates a response.

MCP Example (using FastMCP):

from fastmcp import FastMCP

mcp = FastMCP("Demo Server")

@mcp.tool("search_docs")
async def search_docs(query: str) -> str:
    """Search for documentation related to the query."""
    return f"Results for: {query}"

@mcp.prompt("code_review")
async def code_review() -> str:
    """Return a template for code review."""
    return "Review the following code for:\n- Bugs\n- Efficiency\n- Readability"

if __name__ == "__main__":
    mcp.run()

This sets up an MCP server with a tool and prompt, connectable via clients like Claude Desktop.

A2A Example (using Python A2A SDK):

from a2a import AgentSkill, AgentCard, A2AServer

# Define skill
skill = AgentSkill(
    id='hello_world',
    name='Returns hello world',
    description='Just returns hello world',
    tags=['hello world'],
    examples=['hi', 'hello world']
)

# Define Agent Card
card = AgentCard(
    name='Hello World Agent',
    description='A simple hello world agent',
    skills=[skill],
    service_url='http://localhost:8000'
)

# Agent logic
def hello_world_handler(task):
    return "Hello, World!"

# Run server
server = A2AServer(card, {'hello_world': hello_world_handler})
server.run(port=8000)

This creates an A2A server; other agents can discover it via the Agent Card and send tasks. The import path and the A2AServer constructor are simplified for illustration; published A2A SDKs may differ in module layout and class names, so check the version you install.

Retrieval-Augmented Generation (RAG), the Model Context Protocol (MCP), and Agent-to-Agent (A2A) each address a limitation of standalone large language models (LLMs): outdated knowledge, isolation from tools, and lack of multi-agent coordination, respectively. The sections below give detailed explanations, architectural notes, comparisons, real-world applications, and implementation guidance, expanding on the core concepts introduced earlier, along with practical considerations, potential challenges, and synergies among the techniques.

In-Depth Explanations of Core Terms

Retrieval-Augmented Generation (RAG)

RAG is a hybrid approach that combines information retrieval with generative AI to produce more accurate and contextually grounded responses. Introduced as a way to mitigate LLM hallucinations—where models generate plausible but incorrect information—RAG works by first querying an external knowledge base (e.g., a vector database) for relevant documents, then feeding these into the LLM’s prompt for generation.

How It Works:

- Retrieval: Embed the user query using models like OpenAI’s text-embedding-3-large and search a vector store (e.g., ChromaDB or FAISS) for similar documents via cosine similarity.

- Augmentation: Inject retrieved content into the prompt, e.g., “Use this context: [retrieved docs] to answer [query].”

- Generation: The LLM (e.g., GPT-4o-mini) processes the augmented prompt to output a response.

- Benefits: Improves factual accuracy, handles domain-specific or real-time data, and is cost-effective compared to fine-tuning. For example, in chatbots, RAG can pull from company docs to answer queries accurately.

- Challenges: Retrieval quality depends on embedding models and index freshness; irrelevant docs can dilute responses.

- Related Concepts: Often paired with semantic search or hybrid retrieval (keyword + vector) for better results.
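The retrieval and augmentation steps described above can be sketched without any external service. This is a minimal illustration, not a production setup: the bag-of-words `embed` function is a stand-in for a real embedding model, and the helper names (`embed`, `cosine`, `retrieve`, `augment`) and sample documents are assumptions made for the example.

```python
import math
from collections import Counter

def embed(text: str) -> Counter:
    """Toy bag-of-words 'embedding': word -> count (stand-in for a real model)."""
    return Counter(text.lower().split())

def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a if w in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0

def retrieve(query: str, docs: list[str], k: int = 1) -> list[str]:
    """Retrieval: rank documents by similarity to the query, keep the top k."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]

def augment(query: str, context: list[str]) -> str:
    """Augmentation: inject the retrieved text into the prompt."""
    joined = "\n".join(context)
    return f"Use this context:\n{joined}\n\nQuestion: {query}\nAnswer:"

docs = [
    "refunds are processed within five business days",
    "our office is closed on public holidays",
]
query = "how long do refunds take"
top_docs = retrieve(query, docs)
prompt = augment(query, top_docs)
print(prompt)  # this string would then be sent to the LLM (generation step)
```

Swapping `embed` for a real embedding model and the sorted list for a vector index changes the quality and scale of retrieval, but the three-step shape stays the same.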

Model Context Protocol (MCP)

MCP is an open-source protocol from Anthropic (released in 2024) designed to standardize how AI agents access external context, including tools, data, and prompts. It acts as a bridge between LLMs and real-world systems, enabling dynamic, secure interactions without custom integrations.

How It Works:

- Architecture: Client-server model where MCP clients (e.g., AI apps like Claude Desktop) connect to MCP servers exposing capabilities.

- Core Components:

- Tools: Executable functions (e.g., API calls).

- Prompts: Templates for guiding LLM behavior.

- Resources: Data sources like files or databases.

- Protocol Flow: Clients discover capabilities by listing tools (the tools/list request, exposed as list_tools() in the Python SDK), invoke them with tools/call (call_tool()), and handle responses as they arrive. Transports include stdio for local servers and HTTP with SSE for streaming.

- Benefits: Promotes interoperability, security (e.g., OAuth), and modularity. Early adopters like Block and Zed use it for agentic coding and data access.

- Challenges: Primarily local-first; remote integrations require additional setup. It’s complementary to protocols like A2A for broader ecosystems.

- Related Concepts: Often used with ReAct (Reasoning + Acting), where agents reason before invoking MCP tools.
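On the wire, discovery and invocation are JSON-RPC 2.0 messages using the methods `tools/list` and `tools/call`. The sketch below imitates that exchange in-process to show the message shapes; it is not an MCP server. The `TOOLS` registry and `handle` function are hypothetical names, and the initialization handshake and transport layer that a real server needs are omitted.

```python
import json

# Hypothetical tool registry: name -> (description, JSON Schema, callable).
TOOLS = {
    "search_docs": (
        "Search documentation for a query.",
        {"type": "object", "properties": {"query": {"type": "string"}}, "required": ["query"]},
        lambda args: f"Results for: {args['query']}",
    )
}

def handle(request: dict) -> dict:
    """Answer one JSON-RPC 2.0 request the way an MCP server might."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        # Discovery: describe every registered tool and its input schema.
        result = {"tools": [
            {"name": n, "description": d, "inputSchema": s} for n, (d, s, _) in TOOLS.items()
        ]}
    elif method == "tools/call":
        # Invocation: look up the tool by name and run it with the given arguments.
        _, _, fn = TOOLS[params["name"]]
        text = fn(params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": text}], "isError": False}
    else:
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": "Method not found"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}

listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "search_docs", "arguments": {"query": "auth"}}})
print(json.dumps(call, indent=2))
```

In practice an SDK such as FastMCP generates the schema from the function signature and handles this dispatch, so application code only defines the tool functions.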

Agent-to-Agent (A2A)

A2A is Google’s 2025 open protocol for enabling AI agents to communicate and collaborate across frameworks and vendors. It treats agents as interoperable services, allowing task delegation in multi-agent systems.

How It Works:

- Architecture: HTTP-based with JSON-RPC for requests. Agents expose “Agent Cards” (JSON metadata at /.well-known/agent.json) for discovery.

- Core Components:

- Tasks: Stateful units of work (e.g., “book flight”) with a lifecycle (submitted → working → completed).

- Messages/Artifacts: Exchange data in modalities like text or JSON.

- Skills: Defined capabilities (e.g., “analyze data”) with input/output specs.

- Protocol Flow: The client agent sends a tasks/send request to the remote agent, which processes the task and can stream status updates via SSE.

- Benefits: Vendor-neutral (supported by 50+ partners like MongoDB), scalable for enterprise (e.g., CRM coordination), and modality-agnostic.

- Challenges: Network-dependent; coordination in controversial tasks (e.g., ethical AI debates) requires careful design to balance viewpoints.

- Related Concepts: Contrasts with ACP (local, low-latency focus) but integrates with MCP for tool access during collaboration.
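To make the components above concrete, here is a rough sketch of an Agent Card, a task submission request, and a simplified task lifecycle, built as plain Python dictionaries. The field names and the `tasks/send` method follow the early published A2A specification as best understood here; later revisions rename some of them, so treat every name as an assumption to check against the spec version you target. The endpoint URL, the flight-booking skill, and the helpers `make_task_request` and `advance` are hypothetical.

```python
import json
import uuid

# Agent Card: metadata a remote agent might publish for discovery.
agent_card = {
    "name": "Flight Booking Agent",
    "description": "Books flights on request",
    "url": "http://localhost:8000",  # hypothetical endpoint
    "version": "1.0.0",
    "capabilities": {"streaming": True},
    "skills": [{"id": "book_flight", "name": "Book flight",
                "description": "Finds and books a flight"}],
}

def make_task_request(text: str) -> dict:
    """Build a JSON-RPC request that submits a new task with one text part."""
    return {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tasks/send",
        "params": {
            "id": str(uuid.uuid4()),  # client-generated task id
            "message": {"role": "user", "parts": [{"type": "text", "text": text}]},
        },
    }

# Simplified lifecycle: which state may follow which.
TRANSITIONS = {
    "submitted": {"working"},
    "working": {"completed", "failed"},
}

def advance(state: str, new_state: str) -> str:
    """Move a task to new_state, rejecting transitions the table does not allow."""
    if new_state not in TRANSITIONS.get(state, set()):
        raise ValueError(f"illegal transition {state} -> {new_state}")
    return new_state

request = make_task_request("Book a flight from Berlin to Lisbon")
state = advance(advance("submitted", "working"), "completed")
print(json.dumps(request, indent=2))
print("final state:", state)
```

A client would first fetch the card to decide whether the agent offers a suitable skill, then POST the request to the card's URL and follow the task through its states.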

Other Related Terms

- ReAct: A prompting technique where agents “reason” (think step-by-step), “act” (use tools), and iterate. Often combined with MCP for action loops.

- ACP (Agent Communication Protocol): A local-first alternative to A2A, suited for edge devices (e.g., robotics) with low-latency IPC.

- Agentic AI: Broad term for autonomous agents; RAG, MCP, and A2A enable this by adding retrieval, tools, and collaboration.
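The ReAct loop can be sketched in a few lines. To keep the example self-contained, a scripted `fake_llm` stands in for a real model, and the `Action: tool[input]` text format, the `calculator` tool, and the `react` function are illustrative assumptions rather than a fixed standard.

```python
import re

def calculator(expression: str) -> str:
    """Hypothetical tool: add integers written as 'a + b + ...'."""
    return str(sum(int(part) for part in expression.split("+")))

TOOLS = {"calculator": calculator}

# Scripted replies standing in for a real LLM, one per loop iteration.
SCRIPT = iter([
    "Thought: I need to add the numbers.\nAction: calculator[2 + 3]",
    "Thought: I have the result.\nFinal Answer: 5",
])

def fake_llm(transcript: str) -> str:
    """Ignore the transcript and return the next scripted reply."""
    return next(SCRIPT)

def react(question: str, llm, max_steps: int = 5) -> str:
    """Alternate reasoning and tool calls until the model gives a final answer."""
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        reply = llm(transcript)
        transcript += "\n" + reply
        if "Final Answer:" in reply:
            return reply.split("Final Answer:", 1)[1].strip()
        match = re.search(r"Action: (\w+)\[(.*)\]", reply)
        if match is None:
            break  # the model neither acted nor answered
        tool, arg = match.groups()
        observation = TOOLS[tool](arg)  # act, then feed the result back
        transcript += f"\nObservation: {observation}"
    return "No answer within step limit"

answer = react("What is 2 + 3?", fake_llm)
print(answer)
```

With MCP in the picture, the `TOOLS` lookup would be replaced by a tool call to an MCP server, while the reason-act-observe loop stays unchanged.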

Detailed Comparisons and Synergies

While RAG, MCP, and A2A address LLM limitations, they differ in scope and application:

| Aspect | RAG | MCP | A2A | ReAct |
|---|---|---|---|---|
| Goal | Augment generation with knowledge | Connect agents to tools/data | Enable agent collaboration | Iterative reasoning + acting |
| Communication Pattern | Internal (retriever → LLM) | Client-server (agent → tool) | Peer-to-peer (agent → agent) | Loop within single agent |
| Discovery Mechanism | Vector similarity search | list_tools() | Agent Cards | N/A (prompt-based) |
| Standardization | Implementation-specific | Open protocol (Anthropic) | Open protocol (Google) | Prompting technique |
| Use in Controversial Topics | Balances views via diverse sources | Tool access for verification | Collaboration for multi-perspective analysis | Reasoning to evaluate biases |

- Synergies: In a multi-agent system, A2A could delegate retrieval to a RAG-specialized agent, which uses MCP to access tools like databases. ReAct enhances individual agents within this setup.

- When to Choose: Use RAG for info-heavy queries, MCP for single-agent automation, A2A for team-based tasks. For balanced views on debated topics (e.g., AI ethics), combine with diverse source retrieval.

Real-World Applications and Case Studies

- RAG in Practice: Used in chatbots (e.g., enterprise search on internal docs) or research tools. Example: Summarizing PDFs by retrieving chunks and generating insights.

- MCP in Practice: In IDEs like Cursor for code reviews (fetching repo data) or assistants like Claude for calendar checks.

- A2A in Practice: Multi-agent workflows, e.g., a travel planner agent (A2A) delegates flight booking to a specialized agent, using MCP for API access.

- Combined Example: An AI customer service system where RAG retrieves FAQs, MCP integrates CRM tools, and A2A coordinates between query-handling and escalation agents.

Advanced Implementation with Python Code

Building on basic examples, here’s an integrated system using all three.

Integrated RAG + MCP + A2A Example (Hypothetical multi-agent setup with LangChain for RAG, FastMCP for MCP, and A2A SDK):

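# RAG component: reuses the chain object built in the RAG example above
# ...

# MCP server setup
from fastmcp import FastMCP

mcp = FastMCP("Integrated Server")

def fetch_data_impl(query: str) -> str:
    """Plain helper so the A2A handler can call the tool logic directly."""
    return "Data fetched: " + query  # Simulate tool

@mcp.tool("fetch_data")
async def fetch_data(query: str) -> str:
    return fetch_data_impl(query)

# A2A agent setup (simplified SDK surface, as in the basic example)
from a2a import AgentSkill, AgentCard, A2AServer

skill = AgentSkill(id='integrated_task', name='Handle integrated task', description='Uses RAG, MCP')
card = AgentCard(name='Integrated Agent', skills=[skill], service_url='http://localhost:8000')

def handler(task):
    # Invoke RAG
    rag_response = chain.invoke(task['query'])
    # Call the tool logic through the plain helper; the decorated fetch_data
    # is async and registered with MCP, so calling it here would not return a string
    mcp_response = fetch_data_impl(task['query'])
    return f"RAG: {rag_response}, MCP: {mcp_response}"

server = A2AServer(card, {'integrated_task': handler})
server.run(port=8000)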

Run the server; other A2A agents can delegate tasks here, leveraging RAG for knowledge and MCP for tools.

For math problems (closed-ended), e.g., solving quadratic equations via ReAct + code tool:

- Reasoning: Prompt agent to reason step-by-step, generate code (e.g., using sympy), execute via tool, verify.

- Solution: For ax² + bx + c = 0, the roots are x = (-b ± sqrt(b² - 4ac)) / (2a). Code:

import math
discriminant = b**2 - 4*a*c
root1 = (-b + math.sqrt(discriminant)) / (2*a)
...
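A complete version of the code-and-verify step might look like the sketch below. It uses the standard library's `cmath` rather than sympy so that a negative discriminant yields complex roots instead of an error; the function names `solve_quadratic` and `verify` are chosen for this example.

```python
import cmath

def solve_quadratic(a: float, b: float, c: float) -> tuple[complex, complex]:
    """Return both roots of a*x**2 + b*x + c = 0 (complex if the discriminant is negative)."""
    if a == 0:
        raise ValueError("a must be non-zero for a quadratic equation")
    discriminant = b**2 - 4*a*c
    sqrt_d = cmath.sqrt(discriminant)
    return (-b + sqrt_d) / (2*a), (-b - sqrt_d) / (2*a)

def verify(a: float, b: float, c: float, root: complex, tol: float = 1e-9) -> bool:
    """Verification step: substitute the root back into the equation."""
    return abs(a*root**2 + b*root + c) < tol

roots = solve_quadratic(1, -3, 2)  # x^2 - 3x + 2 = (x - 1)(x - 2)
print(roots)
print(all(verify(1, -3, 2, r) for r in roots))
```

In a ReAct setting the agent would generate code like `solve_quadratic`, run it through a code-execution tool, and use a check like `verify` as its final observation before answering.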

Potential Challenges and Best Practices

- Uncertainty Handling: Hedge on controversial topics (e.g., “Evidence suggests… but views differ”).

- Security: Use authentication in MCP/A2A; validate retrieval in RAG.

- Scalability: Combine for agentic workflows; monitor with tools like LangSmith.

- Future Outlook: As AI evolves, these may integrate further, enabling fully autonomous systems.

This survey provides a self-contained guide, ensuring you can implement and adapt these techniques effectively.