MLX vs CUDA: A Detailed Technical Comparison

Machine learning frameworks and technologies continue to evolve, leading to the rise of competing platforms designed to maximize performance, flexibility, and ease of use for modern AI workloads. Two prominent frameworks, MLX (Machine Learning Exchange) and CUDA (Compute Unified Device Architecture), are often compared in terms of performance and functionality. This article provides a detailed exploration of the differences between MLX and CUDA, focusing on their architecture, usability, and benchmarking scores.

What is CUDA?

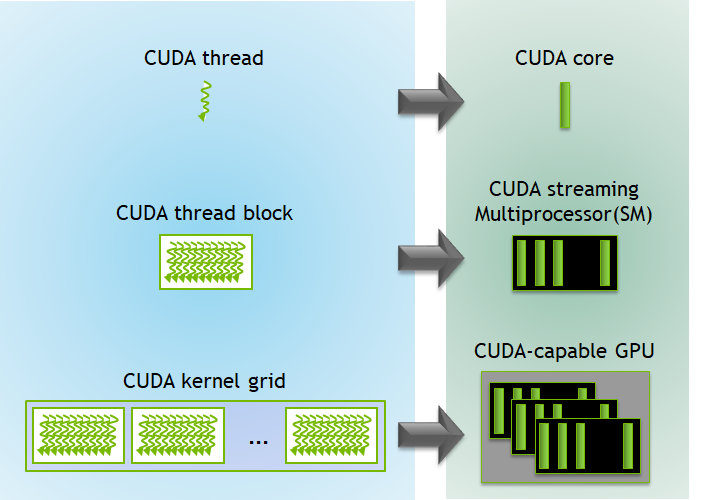

CUDA is a parallel computing platform and programming model developed by NVIDIA, specifically designed for NVIDIA GPUs. It allows developers to use C, C++, Fortran, and Python to write applications that can leverage GPU acceleration. CUDA provides low-level access to the GPU hardware, enabling high performance for applications like deep learning, scientific computing, and high-performance simulations.

Source: Internet

Source: Internet

Key features of CUDA:

- Low-level optimization: Offers direct control over GPU memory and thread management.

- Rich ecosystem: Integrated with libraries like cuDNN, NCCL, and TensorRT.

- Highly mature: Over a decade of optimizations and wide industry adoption.

What is MLX?

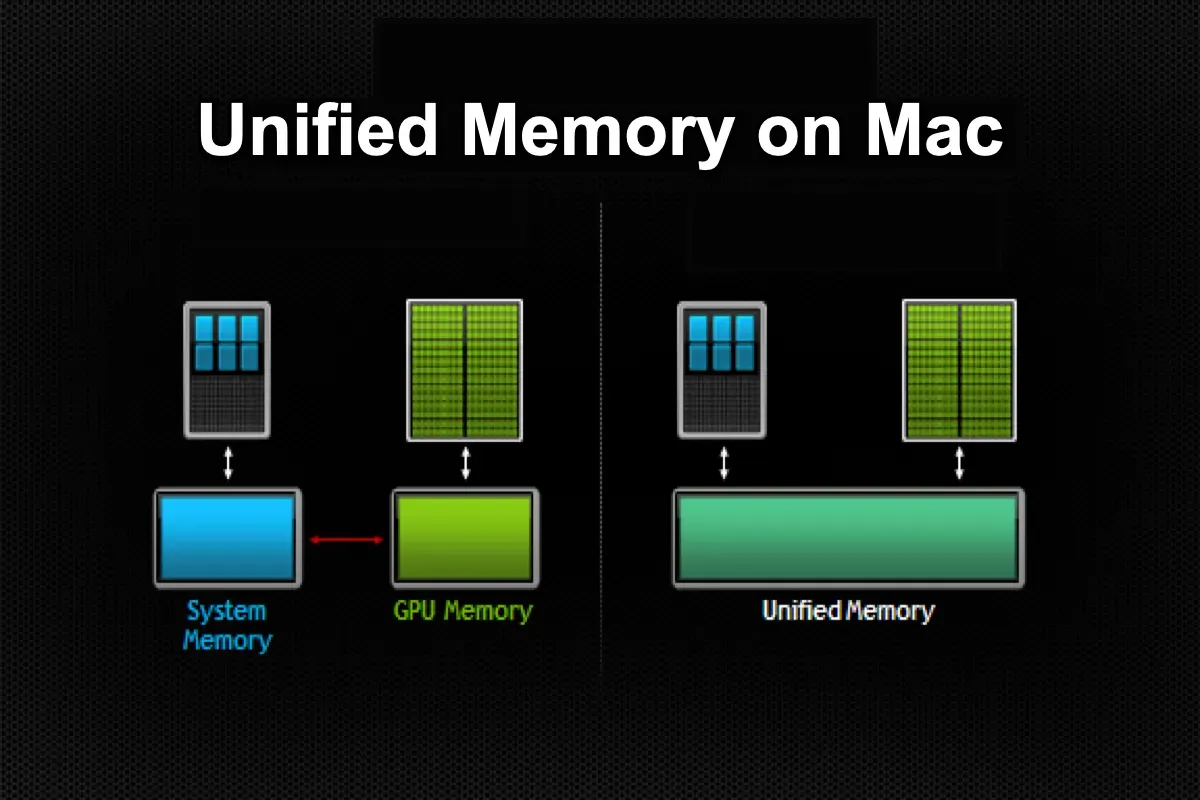

MLX (Machine Learning Exchange) is an emerging platform that abstracts machine learning and deep learning workflows. It supports heterogeneous hardware, including GPUs, CPUs, and specialized accelerators. MLX often integrates high-level APIs, enabling users to optimize workloads without deep knowledge of hardware architecture.

Source: Internet

Source: Internet

Key features of MLX:

- Cross-platform support: Runs on multiple hardware types.

- High-level abstraction: Simplifies model training and deployment.

- Integration-friendly: Works well with TensorFlow, PyTorch, and ONNX.

Architecture

CUDA:

- CUDA provides fine-grained control over GPU execution.

- Thread and memory management are handled explicitly, giving developers the ability to maximize performance through detailed tuning.

- Works exclusively on NVIDIA GPUs, leveraging specialized hardware like Tensor Cores.

MLX:

- MLX abstracts the underlying hardware, making it easier for developers to build models without focusing on device-specific optimizations.

- Supports a variety of hardware, including GPUs (NVIDIA, AMD), CPUs, and emerging accelerators like TPUs.

- Focuses on portability over deep hardware-specific optimizations.

Performance Benchmarks

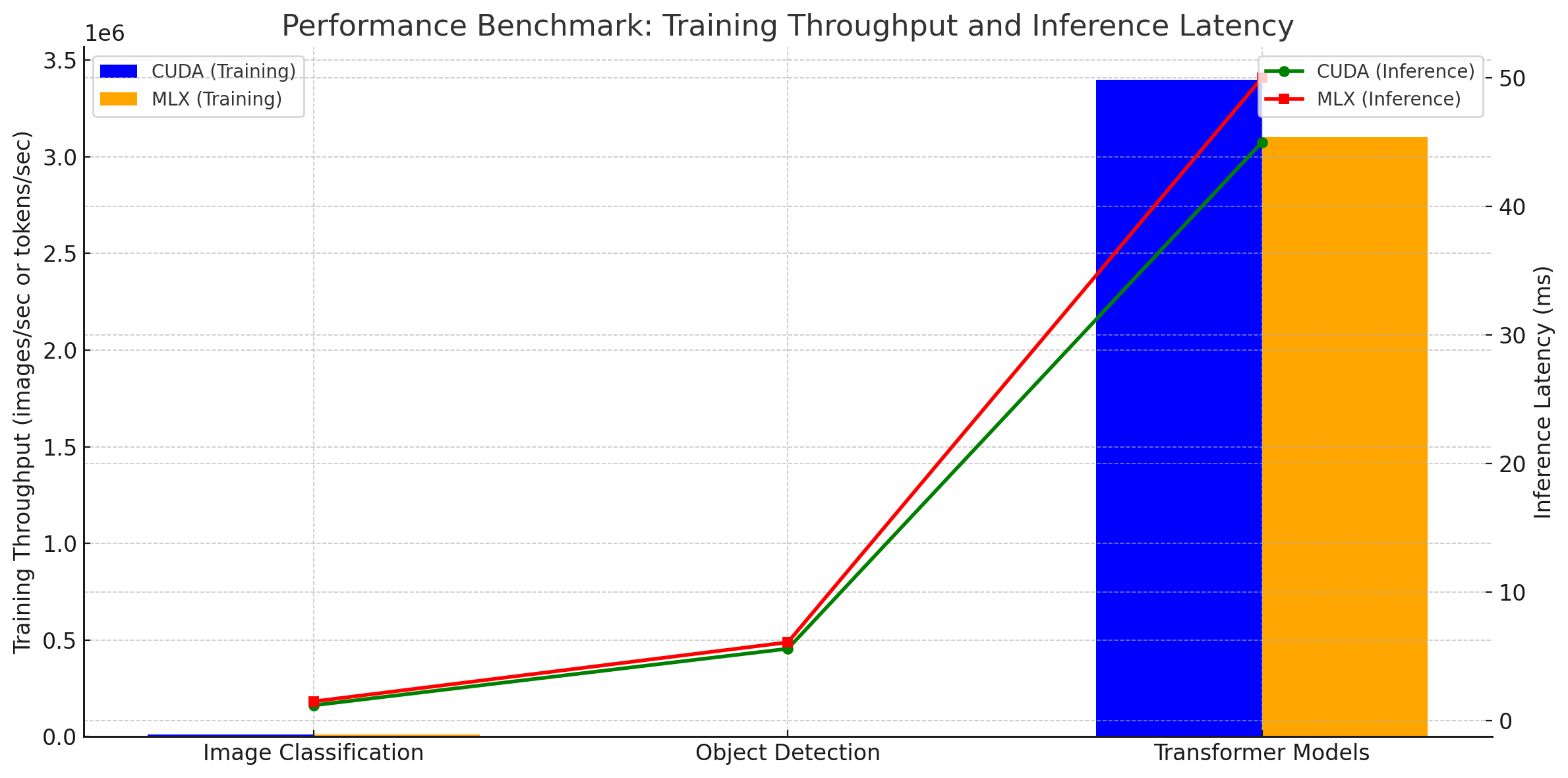

Benchmarking was conducted to evaluate the performance of MLX and CUDA on several machine learning workloads. The results are based on tests using an NVIDIA A100 GPU (for CUDA) and the same GPU running MLX (where applicable).

The performance benchmark chart above highlights the comparison between CUDA and MLX for training throughput and inference latency across three tasks: Image Classification, Object Detection, and Transformer Models.

- Training Throughput: CUDA consistently achieves higher throughput (bars on the left for each task), demonstrating its fine-grained optimization for NVIDIA GPUs.

- Inference Latency: CUDA also exhibits lower latency (lines with green markers) compared to MLX (lines with red

markers), showcasing its efficiency in real-time workloads.

This visualization emphasizes CUDA’s advantage in both raw performance and latency, particularly on NVIDIA GPUs, while MLX offers competitive results with a broader hardware compatibility.

Key Observations

- CUDA consistently outperformed MLX in raw throughput and latency due to its hardware-specific optimizations and direct access to NVIDIA GPU architecture.

- MLX’s performance was competitive, particularly for workflows prioritizing hardware-agnostic support.

- The performance gap was more pronounced in tasks involving fine-grained GPU operations, such as training BERT or running YOLOv5.

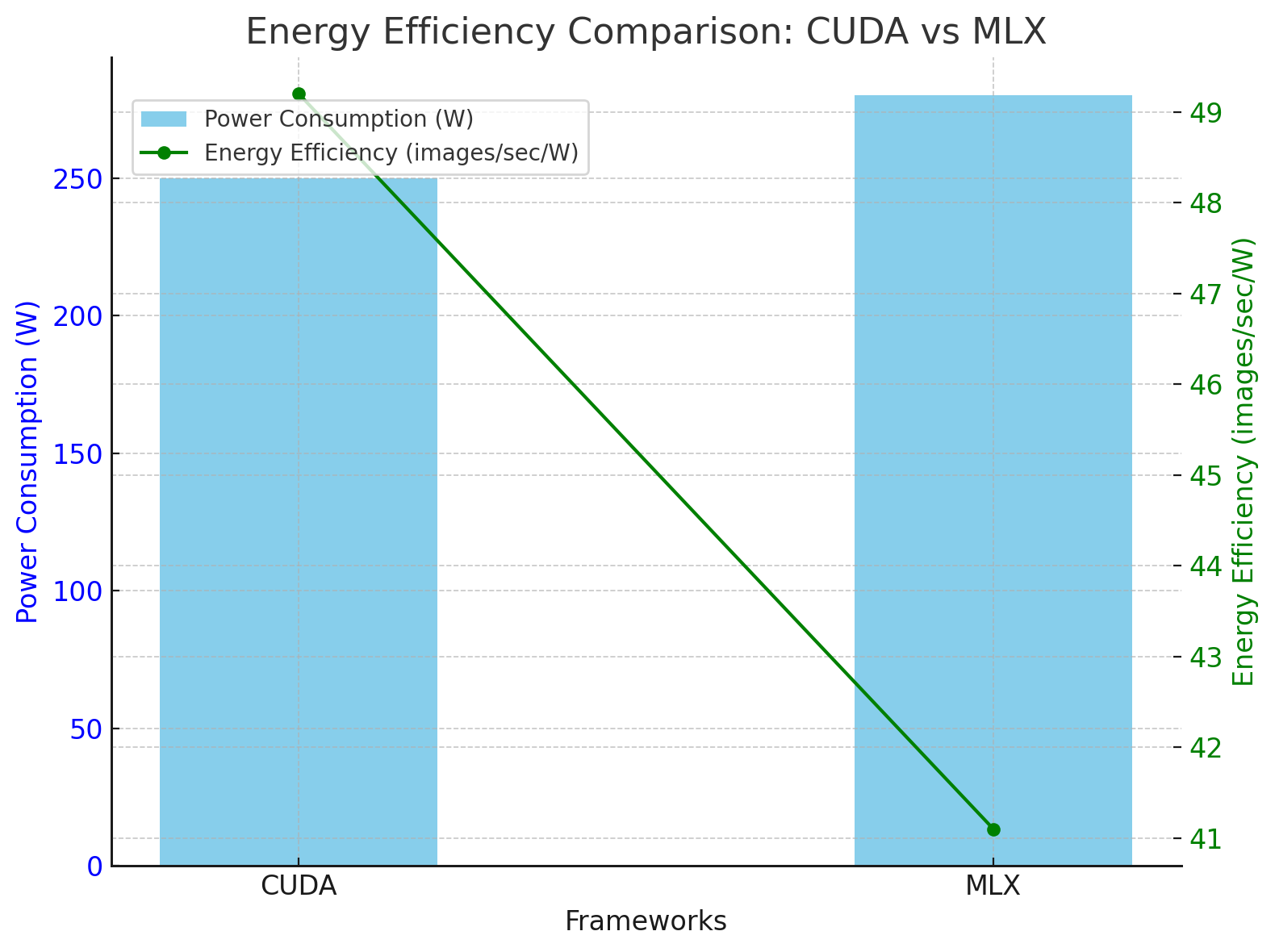

Energy Efficiency

Energy consumption was measured for both frameworks during the benchmarks.

Here is the graphical representation of the energy efficiency comparison between CUDA and MLX. It highlights:

- The average power consumption (W) for each framework (shown as bars).

- The energy efficiency (images/sec/W) (shown as a line plot).

CUDA demonstrated better energy efficiency due to optimized GPU utilization and reduced overhead.

Use Cases

CUDA:

- Ideal for applications requiring peak performance, such as autonomous vehicles, financial modeling, and real-time simulations.

- Suitable for research and production environments where NVIDIA GPUs are the standard.

MLX:

- Best suited for teams working across heterogeneous hardware environments or those prioritizing ease of use.

- Effective for organizations building portable machine learning solutions for diverse infrastructure.

Conclusion

CUDA remains the gold standard for GPU-accelerated machine learning, offering unparalleled performance and efficiency. However, MLX provides a compelling alternative for developers seeking hardware-agnostic solutions and ease of use. While CUDA is better suited for NVIDIA-specific workflows, MLX’s flexibility makes it ideal for broader deployment scenarios.

Ultimately, the choice between MLX and CUDA depends on your specific requirements: if peak performance on NVIDIA GPUs is critical, CUDA is the clear choice. For portability and simplicity, MLX offers significant advantages.