Using Explainable AI (XAI) in Fintech

Introduction to Explainable AI (XAI)

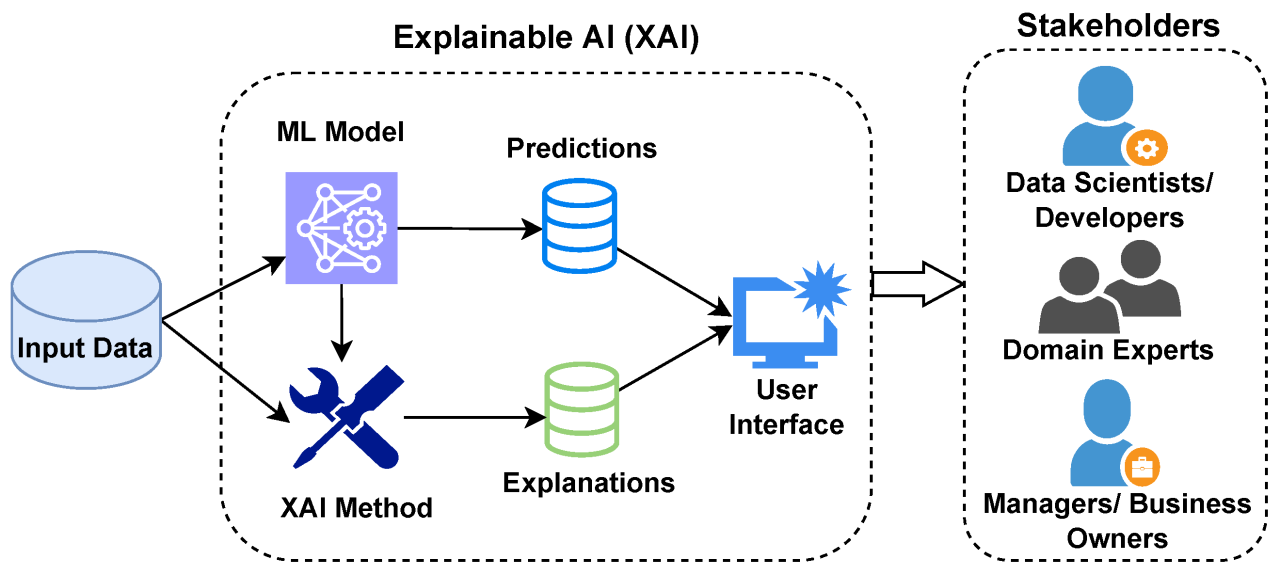

Explainable AI (XAI) refers to the subset of artificial intelligence focused on making the decisions and predictions of AI models understandable and interpretable to humans. As AI systems grow in complexity, particularly with the use of deep learning, their “black-box” nature poses challenges in trust, accountability, and regulatory compliance. XAI techniques aim to bridge this gap by providing insights into how AI models make decisions.

Source: Internet

Key Components of XAI

Model Interpretability:

- Ability to understand the inner workings of an AI model.

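- Examples: Decision trees and linear regression are inherently interpretable. Very small neural networks can sometimes be inspected directly, but most neural networks are not interpretable without additional tooling.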

Post-Hoc Explanations:

- Techniques that explain the decisions of black-box models without altering their architecture.

- Examples: LIME (Local Interpretable Model-Agnostic Explanations), SHAP (SHapley Additive exPlanations).
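
To make the idea behind SHAP more concrete, here is a minimal sketch of exact Shapley values for a toy scoring function. It does not use the SHAP library itself; `toy_credit_model`, the feature names, and the all-zero baseline are assumptions made purely for illustration, and the brute-force loop over orderings is only practical for a handful of features.

```python
from itertools import permutations


def toy_credit_model(features):
    """Hypothetical scoring function standing in for a black-box model."""
    score = 0.5 * features["income"] + 0.3 * features["credit_history"]
    # Interaction term, so the model is not purely additive.
    score += 0.2 * features["income"] * features["credit_history"]
    return score


def shapley_values(model, instance, baseline):
    """Average each feature's marginal contribution over all feature orderings."""
    names = list(instance)
    totals = {name: 0.0 for name in names}
    orderings = list(permutations(names))
    for order in orderings:
        current = dict(baseline)  # start from the reference input
        previous_output = model(current)
        for name in order:
            current[name] = instance[name]  # switch one feature to its real value
            new_output = model(current)
            totals[name] += new_output - previous_output
            previous_output = new_output
    return {name: total / len(orderings) for name, total in totals.items()}


# Assumed inputs: features scaled to [0, 1], baseline = all zeros.
applicant = {"income": 1.0, "credit_history": 1.0}
baseline = {"income": 0.0, "credit_history": 0.0}
phi = shapley_values(toy_credit_model, applicant, baseline)
print(phi)
```

For this toy input the attributions come out at roughly 0.6 for income and 0.4 for credit history, and they add up to the difference between the model output for the applicant and for the baseline. That additivity is the property that makes this family of explanations convenient to present.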

Feature Importance Analysis:

- Quantifying the contribution of each feature to a model’s prediction.
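
One common way to quantify feature contributions at the model level is permutation importance: shuffle one feature column and measure how much the error grows. The sketch below uses only the standard library; the `predict` function, the synthetic data, and the choice of mean absolute error are assumptions for illustration, not a recommendation for any particular model.

```python
import random


def predict(row):
    """Hypothetical model: strong dependence on feature 0, weak on 1, none on 2."""
    return 3.0 * row[0] + 0.5 * row[1]


def mean_abs_error(rows, targets):
    return sum(abs(predict(r) - t) for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, n_repeats=20, seed=0):
    """Return the average error increase caused by shuffling each column."""
    rng = random.Random(seed)
    base_error = mean_abs_error(rows, targets)
    importances = []
    for col in range(len(rows[0])):
        increases = []
        for _ in range(n_repeats):
            shuffled = [r[col] for r in rows]
            rng.shuffle(shuffled)
            # Rebuild each row with only the chosen column replaced.
            permuted = [r[:col] + [v] + r[col + 1:] for r, v in zip(rows, shuffled)]
            increases.append(mean_abs_error(permuted, targets) - base_error)
        importances.append(sum(increases) / n_repeats)
    return importances


# Synthetic data: targets are generated by the model itself, so base error is 0.
data_rng = random.Random(42)
rows = [[data_rng.random() for _ in range(3)] for _ in range(200)]
targets = [predict(r) for r in rows]
importances = permutation_importance(rows, targets)
print(importances)
```

The unused third feature gets an importance of zero, while the first feature dominates. Permutation importance can be misleading when features are strongly correlated, so it is usually read alongside other explanations.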

Counterfactual Explanations:

- Offering hypothetical scenarios that show how changes in input features could alter the outcome.
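
As a rough illustration of a counterfactual explanation, the sketch below searches for the smallest single-feature change that turns a denial into an approval. The scoring rule in `is_approved`, the feature names, and the search ranges are all hypothetical; a production system would search over a trained model and restrict itself to changes the customer can realistically make.

```python
def is_approved(applicant):
    """Hypothetical integer scorecard standing in for a trained model."""
    score = (
        6 * applicant["income_k"]
        + 20 * applicant["credit_years"]
        - 150 * applicant["defaults"]
    )
    return score >= 500


def single_feature_counterfactuals(applicant, search_ranges):
    """For each feature, find the first candidate value that flips the decision."""
    results = {}
    for feature, candidate_values in search_ranges.items():
        for value in candidate_values:
            candidate = dict(applicant, **{feature: value})
            if is_approved(candidate):
                results[feature] = value
                break  # candidates are ordered, so this is the smallest change
    return results


applicant = {"income_k": 40, "credit_years": 5, "defaults": 0}
# Assumed search space: only increases, in ascending order.
search_ranges = {
    "income_k": range(41, 151),
    "credit_years": range(6, 31),
}
counterfactuals = single_feature_counterfactuals(applicant, search_ranges)
print(counterfactuals)  # {'income_k': 67, 'credit_years': 13}
```

The result can be phrased for the customer as "the application would have been approved with an annual income of 67k, or with 13 years of credit history", which tends to be more actionable than a list of feature weights.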

Visualization Tools:

- Tools such as saliency maps, partial dependence plots, and heatmaps that help visualize model behavior.
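
The numbers behind a partial dependence plot are simple to compute: fix one feature at each value on a grid, leave the other features as observed, and average the predictions. The following sketch assumes a hypothetical `risk_model` and a tiny hand-made dataset; plotting the resulting curve is left to whichever charting tool is in use.

```python
def risk_model(row):
    """Hypothetical risk score from (income, utilization), both scaled to [0, 1]."""
    income, utilization = row
    return 0.6 * utilization - 0.3 * income + 0.3


def partial_dependence(predict, rows, feature_index, grid):
    """Average prediction over the data with one feature forced to each grid value."""
    curve = []
    for value in grid:
        total = 0.0
        for row in rows:
            modified = list(row)
            modified[feature_index] = value  # override only the feature of interest
            total += predict(modified)
        curve.append(total / len(rows))
    return curve


rows = [(0.2, 0.9), (0.5, 0.4), (0.8, 0.1)]
grid = [0.0, 0.5, 1.0]
pd_utilization = partial_dependence(risk_model, rows, feature_index=1, grid=grid)
print(list(zip(grid, pd_utilization)))
```

Here the curve rises steadily with utilization, which is what a reviewer would expect to see for a risk score. A curve that moves in an unexpected direction is often the first hint that a model has learned something unintended.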

Implementation of XAI in Fintech

Fintech, characterized by high stakes and stringent regulatory environments, offers fertile ground for XAI adoption. Here’s how XAI can be implemented:

Credit Scoring and Loan Approvals

- Current Challenge: Customers and regulators demand transparency in how creditworthiness is evaluated.

- XAI Application: Use SHAP or LIME to explain which features (e.g., income, credit history, spending patterns) most influenced a loan approval or denial.

- Implementation: Integrate these explanations into user-facing dashboards for customer clarity and internal audit purposes.
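
One way the dashboard step could look in practice is a small function that turns per-feature attributions into plain-language reasons for a denial. The sketch below assumes the attributions have already been produced by a tool such as SHAP or LIME; the numbers, feature names, and the wording in `REASON_TEXT` are invented for illustration.

```python
# Hypothetical mapping from internal feature names to customer-facing wording.
REASON_TEXT = {
    "credit_history_length": "Length of credit history is short",
    "utilization": "Credit utilization is high",
    "recent_inquiries": "Several recent credit inquiries",
    "income": "Income is low relative to the requested amount",
}


def adverse_reasons(contributions, top_n=2):
    """Return wording for the features that pushed the score down the most."""
    negatives = [(name, value) for name, value in contributions.items() if value < 0]
    negatives.sort(key=lambda item: item[1])  # most negative first
    return [REASON_TEXT.get(name, name) for name, _ in negatives[:top_n]]


# Assumed attribution values for one denied application.
contributions = {
    "income": 0.12,
    "credit_history_length": -0.30,
    "recent_inquiries": -0.08,
    "utilization": -0.21,
}
reasons = adverse_reasons(contributions)
for reason in reasons:
    print("-", reason)
```

The same attribution dictionary can feed both views: the full set of values for internal audit, and the short ranked list of reasons for the customer.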

Fraud Detection

- Current Challenge: Many machine-learning fraud detection models are opaque, which makes it difficult to understand why false positives and false negatives occur.

- XAI Application: Deploy anomaly detection models with explainability layers, highlighting specific transaction attributes (e.g., unusual location, time, or amount) responsible for flagging a transaction.

- Implementation: Combine explainability with real-time alerts to reduce investigation times and enhance trust.
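
A very simple version of an anomaly detector with an explainability layer is a per-attribute z-score check that reports which attributes triggered the flag. This is a sketch under strong assumptions: the attribute names, the customer history, and the threshold of 3 standard deviations are illustrative, and real systems typically use richer models and features.

```python
from statistics import mean, stdev


def attribute_z_scores(history, transaction):
    """Compare each attribute of a transaction with the customer's own history."""
    scores = {}
    for attr, value in transaction.items():
        past = [t[attr] for t in history]
        sigma = stdev(past)
        scores[attr] = 0.0 if sigma == 0 else (value - mean(past)) / sigma
    return scores


def explain_flag(history, transaction, threshold=3.0):
    """Return (is_flagged, attributes responsible), most unusual attribute first."""
    scores = attribute_z_scores(history, transaction)
    flagged = {a: z for a, z in scores.items() if abs(z) >= threshold}
    reasons = sorted(flagged, key=lambda a: -abs(flagged[a]))
    return bool(flagged), reasons


# Hypothetical history: amount, hour of day, distance from home in km.
history = [
    {"amount": 20, "hour": 12, "distance_km": 2},
    {"amount": 25, "hour": 13, "distance_km": 3},
    {"amount": 30, "hour": 14, "distance_km": 1},
    {"amount": 22, "hour": 12, "distance_km": 2},
    {"amount": 28, "hour": 13, "distance_km": 2},
]
transaction = {"amount": 27, "hour": 13, "distance_km": 850}
is_flagged, reasons = explain_flag(history, transaction)
print(is_flagged, reasons)  # True ['distance_km']
```

Attaching the list of responsible attributes to the alert gives an investigator a starting point immediately, instead of a bare "suspicious" label.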

Investment Advisory

- Current Challenge: Robo-advisors often use complex algorithms for portfolio optimization, which users might not fully trust.

- XAI Application: Explain allocation decisions by breaking down the influence of market trends, risk tolerance, and user preferences.

- Implementation: Include visual and textual explanations in advisory reports, enabling better customer understanding.

Regulatory Compliance and Auditing

- Current Challenge: Regulations such as the GDPR and the EU AI Act impose transparency obligations on automated decision-making, so institutions must be able to explain how their AI systems reach decisions.

- XAI Application: Provide detailed audit trails and explanations of decisions to demonstrate adherence to regulations.

- Implementation: Develop frameworks for ongoing monitoring and documentation of AI behavior.
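
An audit trail entry might bundle the inputs, the decision, and its explanation into a single record. The sketch below is one possible shape, not a compliance standard: the field names are assumptions, and the SHA-256 content hash is an optional addition that makes later edits to a stored record detectable.

```python
import hashlib
import json


def make_audit_record(model_version, inputs, decision, explanation, timestamp):
    """Build a decision record and attach a hash of its contents."""
    record = {
        "model_version": model_version,
        "timestamp": timestamp,
        "inputs": inputs,
        "decision": decision,
        "explanation": explanation,
    }
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    record["record_hash"] = hashlib.sha256(payload).hexdigest()
    return record


def verify_record(record):
    """Recompute the hash and check that the stored record was not altered."""
    body = {k: v for k, v in record.items() if k != "record_hash"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest() == record["record_hash"]


# Hypothetical values for a single loan decision.
record = make_audit_record(
    model_version="credit-model-1.4.2",
    inputs={"income_k": 40, "credit_years": 5},
    decision="denied",
    explanation={"credit_years": -0.30, "income_k": -0.12},
    timestamp="2025-01-15T10:30:00Z",
)
print(verify_record(record))  # True
```

Storing the model version next to each explanation matters for ongoing monitoring, because an explanation is only meaningful relative to the model that produced it.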

Customer Service Chatbots

- Current Challenge: Chatbots driven by AI can sometimes provide inconsistent or unclear responses.

- XAI Application: Enhance chatbot transparency by showing the reasoning behind responses, such as past interactions or keyword significance.

- Implementation: Integrate explainability modules into chatbot systems to increase user satisfaction and trust.

Scope of XAI in Fintech Over the Next Few Years

Enhanced Trust and Adoption:

- As financial institutions increasingly adopt AI, explainability will become a differentiator for building customer trust.

- Regulators will likely mandate XAI integration to ensure transparency and fairness.

Technological Advancements:

- Emerging XAI tools will offer deeper insights with lower computational overhead.

- Hybrid models combining interpretability and high performance will gain traction.

Personalized Financial Services:

- With XAI, fintech companies can deliver highly personalized services while ensuring that users understand the logic behind recommendations.

Stronger Regulatory Compliance:

- XAI will play a crucial role in satisfying evolving regulatory requirements, particularly in regions emphasizing ethical AI use.

Integration with Blockchain:

- XAI can complement blockchain technology in fintech, offering transparency in both data lineage and AI-driven decision-making.

Risk Management and Fairness:

- By identifying biases and vulnerabilities in models, XAI will enhance risk management and promote equitable AI systems.
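
A first bias check can be as simple as comparing approval rates across groups. The sketch below computes per-group approval rates and their ratio (often called the disparate impact ratio); the group labels, the decision data, and the 0.8 review threshold are assumptions for illustration, and a single metric like this is a screening signal rather than proof of fairness or unfairness.

```python
def approval_rates(decisions):
    """decisions: iterable of (group, approved) pairs. Returns rate per group."""
    counts = {}
    for group, approved in decisions:
        total, positives = counts.get(group, (0, 0))
        counts[group] = (total + 1, positives + int(approved))
    return {group: positives / total for group, (total, positives) in counts.items()}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest."""
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest


# Hypothetical outcomes: group A approved 8 of 10, group B approved 5 of 10.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # assumed review threshold
print(rates, round(ratio, 3), needs_review)
```

In this example the ratio is about 0.625, so the model would be sent for review. Feature-level explanations can then help show which inputs drive the gap between the groups.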

Conclusion

The intersection of XAI and fintech holds immense potential for revolutionizing financial services. By making AI more transparent, interpretable, and accountable, fintech companies can address key challenges around trust, fairness, and compliance. Over the next few years, the adoption of XAI will likely become a critical factor in driving innovation and maintaining competitiveness in the fintech industry.