In June 2017, eight researchers from Google Brain and Google Research published a paper that would fundamentally reshape artificial intelligence. Titled “Attention Is All You Need,” it introduced the Transformer architecture—a model that discarded the conventional wisdom of sequence processing and replaced it with something elegantly simple: pure attention.

The numbers tell the story. As of 2025, this single paper has been cited over 173,000 times, making it one of the most influential works in machine learning history. Today, nearly every large language model you interact with—ChatGPT, Google Gemini, Claude, Meta’s Llama—traces its lineage directly back to this architecture.

But here’s what makes this achievement remarkable: it wasn’t about adding more layers, more parameters, or more complexity. It was about removing what had been considered essential for decades.

The Problem: Sequential Processing

Why RNNs Were Dominant (And Problematic)

Before 2017, the dominant approach for sequence tasks used Recurrent Neural Networks (RNNs), particularly Long Short-Term Memory (LSTM) networks and Gated Recurrent Units (GRUs). The idea was intuitive: process sequences one element at a time, maintaining a hidden state that captures information from previous steps.

Think of it like reading a book word by word, keeping a mental summary as you go.

The Fundamental Bottleneck: RNNs have an inherent constraint: they must process sequentially. The output at step t depends on the hidden state h_t, which depends on the previous state h_{t-1}, which depends on h_{t-2}, and so on. This creates a chain of dependencies that cannot be parallelized across the time steps of a sequence.

From the paper:

“Recurrent models typically factor computation along the symbol positions of the input and output sequences. Aligning the positions to steps in computation time, they generate a sequence of hidden states h_t, as a function of the previous hidden state h_{t-1} and the input for position t.”
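To make the dependency concrete, here is a minimal sketch of that recurrence in plain Python. It is an illustration rather than the paper's code: it assumes a scalar hidden state and a tanh update, and the names `rnn_step` and `rnn_forward` and the weight values `w_x`, `w_h`, and `b` are hypothetical choices made for readability.

```python
import math

def rnn_step(x_t, h_prev, w_x, w_h, b):
    """One step of a scalar vanilla RNN: h_t = tanh(w_x * x_t + w_h * h_prev + b)."""
    return math.tanh(w_x * x_t + w_h * h_prev + b)

def rnn_forward(xs, w_x=0.5, w_h=0.8, b=0.0, h0=0.0):
    """Return the hidden states h_1..h_T for the input sequence xs."""
    h = h0
    states = []
    for x_t in xs:  # the loop has to run in order
        # h_t needs h_{t-1}, so step t cannot start until step t-1 has finished
        h = rnn_step(x_t, h, w_x, w_h, b)
        states.append(h)
    return states

xs = [1.0, -0.5, 0.25, 2.0]   # toy input sequence (illustrative values)
states = rnn_forward(xs)
print(states)
```

Because every iteration reads the `h` written by the previous one, the loop over positions cannot be split across parallel workers. Changing only the first input changes every later hidden state, which is the chain the section describes.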

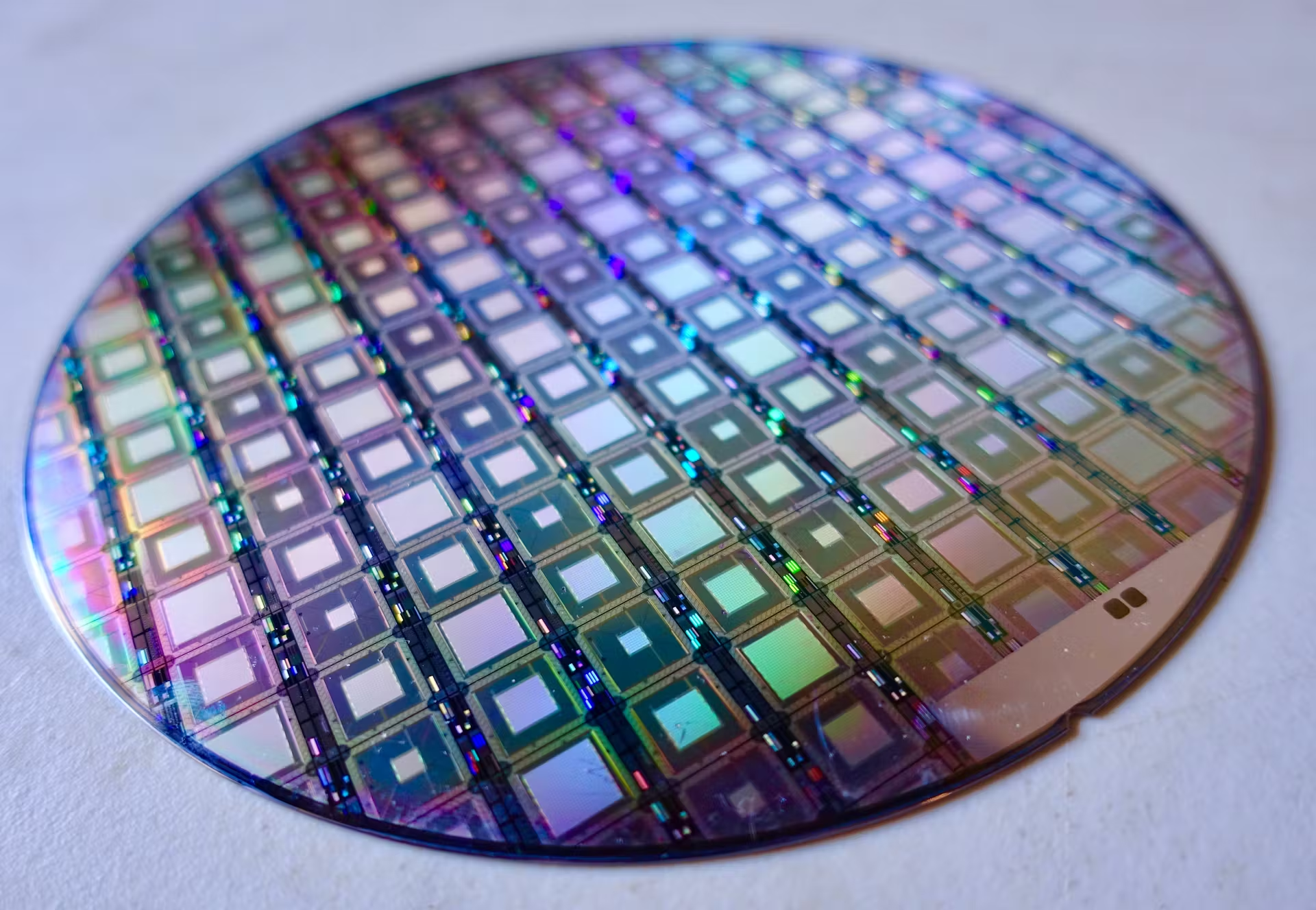

Source: Internet

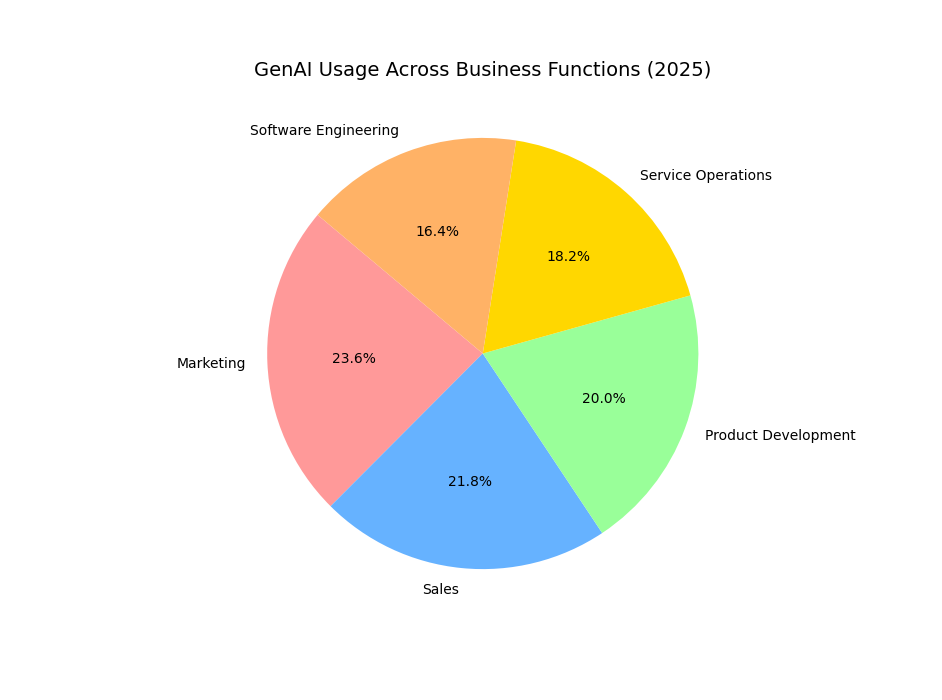

Source: Generated by Matplotlib

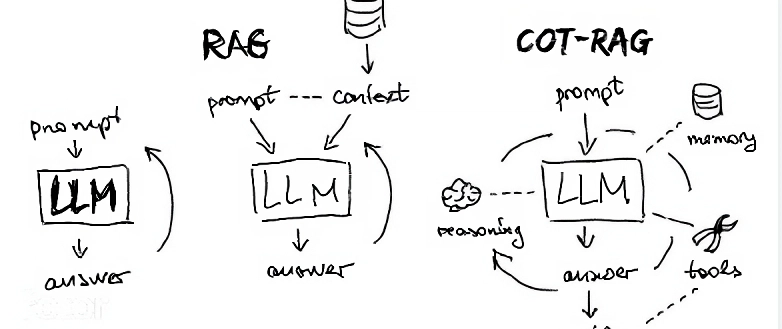

Source: Internet